Cooking Perfection (Day One)

Rejecting tradition in favor of flavor

My Thanksgiving break was just last week. I hoped it would provide a much-needed rest from schoolwork, and I was looking forward to some quality relaxation time with my family. That goal was partially achieved, at least until it came to helping my parents make Thanksgiving dinner. My responsibilities were the mashed potatoes and the pumpkin pie.

Picture them. Chances are, if you've been exposed to the same general American traditions as the rest of us, you have a good idea of what both of these dishes are supposed to taste like. I claim to know exactly what they taste like: I've eaten them many times, so I know what the average pumpkin pie and the average mashed potatoes taste like.

The average version of a dish is not only the truest, most accurate representation of its flavors; it is also the best-tasting example of that dish. Those familiar with the concept of "the wisdom of the crowd" will recognize the idea behind my example.

Picture a jar filled with candy.

Imagine 1,000 people are asked how many candies are in the jar (a common exercise; you may even have taken part in one yourself). Chances are, the guesses will be centered very close to the actual number, so their average should be very accurate. I'd like to apply this principle to cooking. Instead of picking one recipe and hoping it's one of the more accurate and tasty ones, we can create an average recipe that should reliably capture the dish's true taste.
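To make the candy-jar intuition concrete, here is a small simulation. It is only a sketch under one big assumption: each person's guess is the true count plus independent, unbiased random noise (the true count of 500 and the spread of 150 are made-up numbers). Under that assumption, individual errors largely cancel out, so the average lands much closer to the truth than a typical single guess does. If a crowd shares a bias (everyone underestimates, say), averaging won't remove it.

```python
import random
import statistics


def simulate_guesses(true_count: int, n_people: int, spread: float, seed: int = 0) -> list[float]:
    """Model each guess as the true count plus independent Gaussian noise (an assumption)."""
    rng = random.Random(seed)
    return [true_count + rng.gauss(0, spread) for _ in range(n_people)]


true_count = 500  # made-up number of candies in the jar
guesses = simulate_guesses(true_count, n_people=1000, spread=150)

crowd_estimate = statistics.mean(guesses)
# How far off a typical individual is, versus how far off the average is.
typical_individual_error = statistics.mean([abs(g - true_count) for g in guesses])
crowd_error = abs(crowd_estimate - true_count)

print(f"crowd estimate:           {crowd_estimate:.1f}")
print(f"typical individual error: {typical_individual_error:.1f}")
print(f"error of the average:     {crowd_error:.1f}")
```

The same logic is what the recipe idea leans on: if each recipe author "guesses" the ideal lasagna with their own random quirks, averaging many recipes should wash those quirks out.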

I got started by opening up a Google Colab notebook. Colab notebooks let you write Python code in your browser and run it on runtimes supplied by Google. It's essentially a Jupyter notebook that's hosted in the cloud instead of locally. I chose Google Colab because:

- My methods of obtaining recipes are of questionable legality (illegal)

- The code is organized into cells, which makes it easy to keep variables around for later use and could cut down on how many requests I need to make

- I can export my notebooks to HTML files to share with you guys!
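On the point about saving results to minimize requests: one way to go beyond keeping variables in memory is to cache every downloaded page on disk, so re-running a cell never re-fetches a page you already have. This is a hypothetical helper (`cached_fetch` and the `page_cache` folder are my own names, not taken from the actual notebook), and the download function is passed in so it can be anything. Keep in mind that a Colab runtime's local disk is wiped when the runtime is recycled, so for a cache that survives between sessions you'd point `cache_dir` at a mounted Google Drive folder.

```python
import hashlib
from pathlib import Path
from typing import Callable


def cached_fetch(url: str, fetch: Callable[[str], str], cache_dir: str = "page_cache") -> str:
    """Return the page for `url`, calling `fetch` only if no copy is cached on disk yet."""
    directory = Path(cache_dir)
    directory.mkdir(parents=True, exist_ok=True)
    # Hash the URL so any URL maps to a safe, fixed-length file name.
    path = directory / (hashlib.sha256(url.encode("utf-8")).hexdigest() + ".html")
    if path.exists():
        return path.read_text(encoding="utf-8")
    html = fetch(url)
    path.write_text(html, encoding="utf-8")
    return html
```

In a notebook, `fetch` could be something like `lambda u: urllib.request.urlopen(u).read().decode("utf-8")`; the first call downloads the page and every later call for the same URL reads it from disk.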

My progress today was learning how to crawl the Allrecipes website to find recipes, downloading those recipes, and beginning to parse out their ingredient lists. I mostly worked with lasagna recipes, though I also ran some tests on ramen recipes!

I ended up downloading roughly a gigabyte of recipes, which leads me to my next problem. Even though I don't think they'll trace the downloads back to my IP address, it's quite possible they could trace them to the Google account connected to my Colab runtimes, and I would hate to have my email account blocked. I might look into creating a burner Google account. If I decide I need a lot of data (terabytes), I may look for off-site server space instead.
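For the "parse out ingredient lists" step, here is one possible approach, not necessarily what my notebook does. Many recipe sites embed schema.org `Recipe` data in a `<script type="application/ld+json">` tag, with the ingredients in a `recipeIngredient` list. Assuming a downloaded page includes that markup, the sketch below pulls the ingredient lines out using only the standard library. `JsonLdExtractor`, `find_recipe`, and `extract_ingredients` are hypothetical names, and the sample page is made up.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> tag."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data  # script text may arrive in several chunks


def find_recipe(node):
    """Depth-first search for a JSON object whose @type is (or includes) "Recipe"."""
    if isinstance(node, dict):
        types = node.get("@type", [])
        if types == "Recipe" or (isinstance(types, list) and "Recipe" in types):
            return node
        children = node.values()  # also covers wrappers such as "@graph"
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_recipe(child)
        if found is not None:
            return found
    return None


def extract_ingredients(html: str) -> list[str]:
    """Return the recipeIngredient lines of the first Recipe found, or [] if none."""
    parser = JsonLdExtractor()
    parser.feed(html)
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks
        recipe = find_recipe(data)
        if recipe is not None:
            return list(recipe.get("recipeIngredient", []))
    return []


sample_html = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Recipe", "name": "Test Lasagna",
 "recipeYield": "8 servings",
 "recipeIngredient": ["1 pound ground beef", "2 eggs", "1 pinch of salt"]}
</script>
</head><body></body></html>
"""
print(extract_ingredients(sample_html))
```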

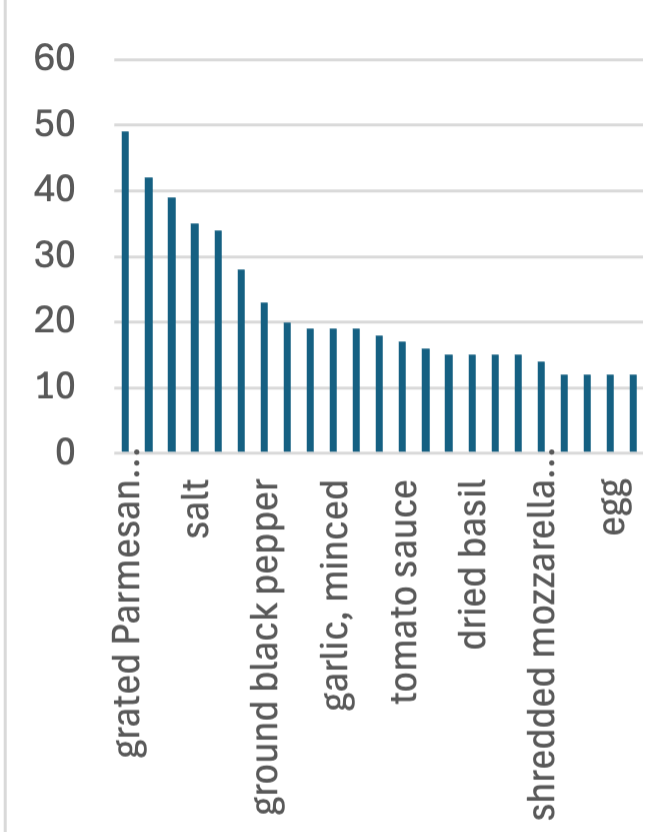

I learned a lot today. The main takeaway is that I need to figure out how to merge identical ingredients that different authors listed slightly differently: think "ginger" and "fresh ginger", or "salt" and "pinch of salt". I generated a graph of what were supposedly the most common ingredients in lasagna, and its inaccuracies helped me think about ways to clean and combine my ingredient data.
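A crude first pass at merging those variants might look like this: lowercase everything, drop quantities, parentheticals, and preparation notes, then throw away unit and descriptor words. The word lists are hypothetical and far from complete, and this approach has obvious blind spots (it won't merge "egg" with "eggs", and it will happily mangle something like "salt and pepper"), so treat it as a sketch rather than a finished cleaner.

```python
import re
from collections import Counter

# Hypothetical, hand-picked word lists; a real cleaner would need far longer ones.
UNITS = {"cup", "cups", "tablespoon", "tablespoons", "teaspoon", "teaspoons",
         "pound", "pounds", "ounce", "ounces", "pinch", "pinches",
         "clove", "cloves", "package", "packages"}
DESCRIPTORS = {"fresh", "chopped", "minced", "grated", "shredded", "large", "small", "of"}


def normalize_ingredient(line: str) -> str:
    """Reduce a line like '2 tablespoons Fresh Ginger, minced' to 'ginger'."""
    text = line.lower()                     # "Salt" and "salt" become the same key
    text = text.split(",")[0]               # drop preparation notes after a comma
    text = re.sub(r"\([^)]*\)", " ", text)  # drop parentheticals such as "(16 ounce)"
    words = re.findall(r"[a-z]+", text)     # alphabetic words only, so quantities disappear
    kept = [w for w in words if w not in UNITS and w not in DESCRIPTORS]
    return " ".join(kept)


def count_ingredients(recipes: list[list[str]]) -> Counter:
    """Count how many recipes each normalized ingredient appears in."""
    counts = Counter()
    for ingredient_lines in recipes:
        # A set, so an ingredient listed twice in one recipe only counts once.
        counts.update({normalize_ingredient(line) for line in ingredient_lines})
    return counts


recipes = [
    ["1 pinch of salt", "2 tablespoons Fresh Ginger, minced"],
    ["Salt", "1 teaspoon ginger"],
]
print(count_ingredients(recipes))
```

With this, "salt", "Salt", and "pinch of salt" all land in the same bucket, which is exactly the kind of merging the graph was missing.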

Some issues with that graph (which is about 14,000 pixels long; cropped for the website):

- The ingredients aren't normalized to serving size. For example, 4 eggs in a lasagna serving 8 people and 2 eggs in a lasagna serving 4 people are functionally the same (half an egg per serving), but currently the former counts twice as much as the latter.

- Matching isn't even case-insensitive, so "Salt" and "salt" count as different ingredients.

- "Salt" and "pinch of salt" are currently recorded separately, but they shouldn't be.

- Not pictured: about 200 ingredients that occur only once. These outliers should be filtered out.
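Here is a sketch of how the serving-size, case, and outlier issues above might be handled together. It assumes each recipe has already been parsed into a serving count plus a mapping from ingredient name to quantity in a shared unit (a hypothetical format; converting "1 cup" and "8 ounces" into one unit is its own problem). It divides each quantity by the recipe's servings, lowercases names, and drops ingredients that appear in fewer than `min_recipes` recipes. Note that the average is taken only over recipes that actually use the ingredient.

```python
from collections import defaultdict


def average_per_serving(recipes: list[dict], min_recipes: int = 2) -> dict[str, float]:
    """
    recipes: dicts like {"servings": 8, "ingredients": {"egg": 4, ...}} (hypothetical format).
    Returns the mean per-serving amount of each ingredient used by at least
    `min_recipes` recipes; rarer ingredients are treated as outliers and dropped.
    """
    per_serving = defaultdict(list)
    for recipe in recipes:
        servings = recipe["servings"]
        for name, amount in recipe["ingredients"].items():
            # Lowercase so "Egg" and "egg" share one entry; scale to one serving.
            per_serving[name.lower()].append(amount / servings)
    return {
        name: sum(values) / len(values)
        for name, values in per_serving.items()
        if len(values) >= min_recipes
    }


recipes = [
    {"servings": 8, "ingredients": {"egg": 4, "salt": 1}},
    {"servings": 4, "ingredients": {"Egg": 2, "saffron": 1}},
]
print(average_per_serving(recipes))  # → {'egg': 0.5}
```

Both lasagnas now contribute the same half egg per serving, and the one-off "salt" and "saffron" entries fall below the threshold and are dropped.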

Here's the Google Colab code:

If that wasn't enough, you can read my Obsidian notes on the whole project. They give a broader idea of where I want to take this: